The Easiest UX Win for Your AI App: Make Your LLM 'App-Aware'

If you are building an AI-powered app, your priority is to help users understand how to use it. If you don’t, they will get lost and quickly leave. LLMs are trained on general data, so they know nothing about your app’s specific features. This article presents a simple, powerful technique to solve this problem: make your LLM 'app-aware' with a targeted system prompt, and it becomes your most effective onboarding tool. I’ll show you how I implemented this in my own app, Meadow Mentor, to improve onboarding and feature discovery, and I’ll give you the prompt so you can do it yourself.

The Problem

AI products are gaining features rapidly. At first they offered only text-based chat. Now they offer voice mode, generate images, and connect to other services such as MCP servers and user accounts like Gmail. But how does your LLM know its own capabilities? And how do users discover those capabilities through natural interactions with it?

LLMs are trained on large amounts of general information, but they know nothing about the capabilities and features they have within your app. When I build AI-powered apps, I want the AI to be rich in capabilities and self-aware, so users can learn about those abilities through natural interactions like text or voice chat.

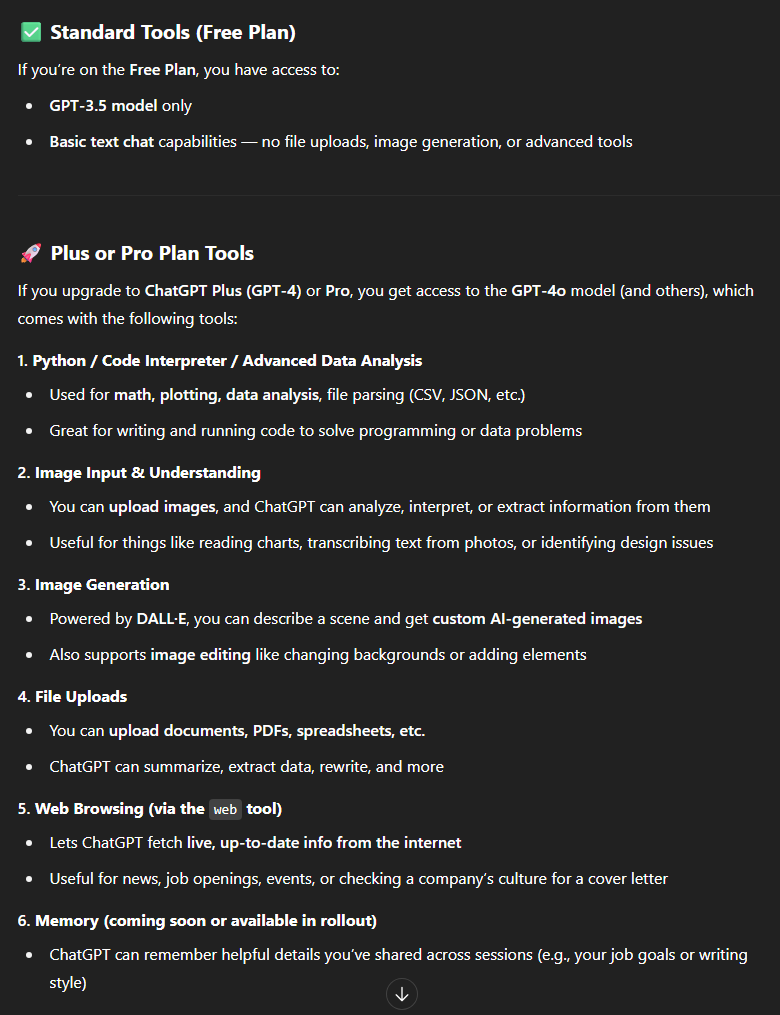

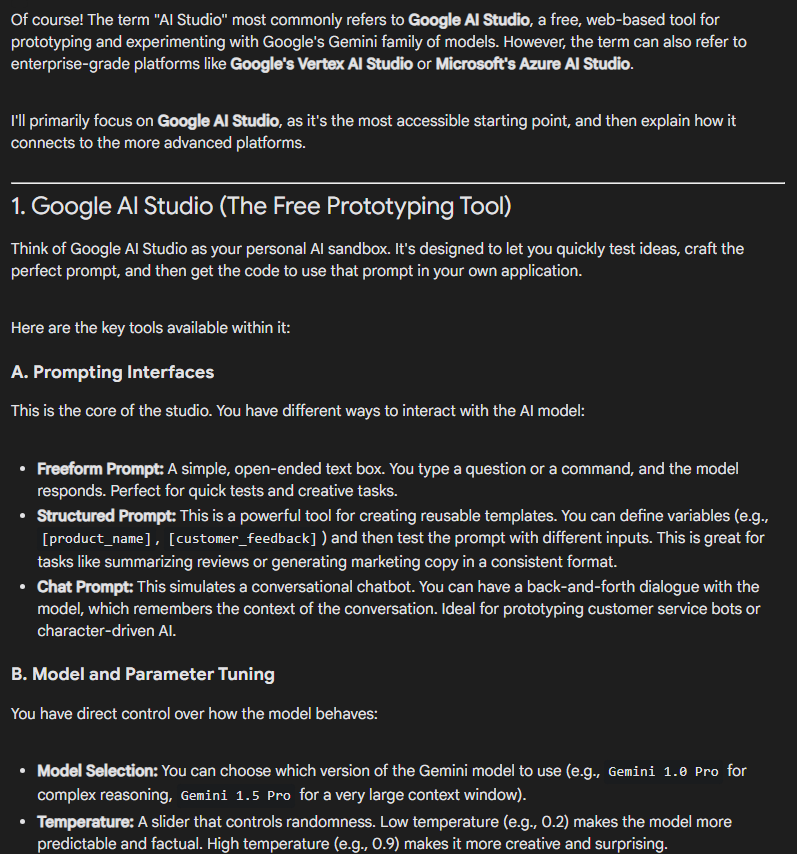

Here are a couple of examples where I tried to learn more about the features available in ChatGPT and Google AI Studio. I asked each of them the following question:

what kind of tools does [chatGPT, AI Studio] have available for me to use?

I made sure not to use web search for these responses, because Meadow Mentor doesn’t allow web searches either.

While web search may provide more up-to-date information on app features, it doesn't let the AI link directly to features within the app itself, which is a crucial UX benefit.

ChatGPT Not App-Aware Response

Gemini AI Studio Not App-Aware Response

Both the ChatGPT and Gemini AI Studio responses are visibly out of date. This is easy to fix, and fixing it can significantly improve the UX, as I'll show with my own app, Meadow Mentor.

I discovered this approach while trying to improve my app's UX. My visitor-to-sign-up rate was 11%, but users weren't returning to the app after their first use. To understand why, I explored the usage data and discovered a confusing onboarding flow and poor overall UX. Users didn’t know what to do.

The Solution: An ‘App-Aware’ AI

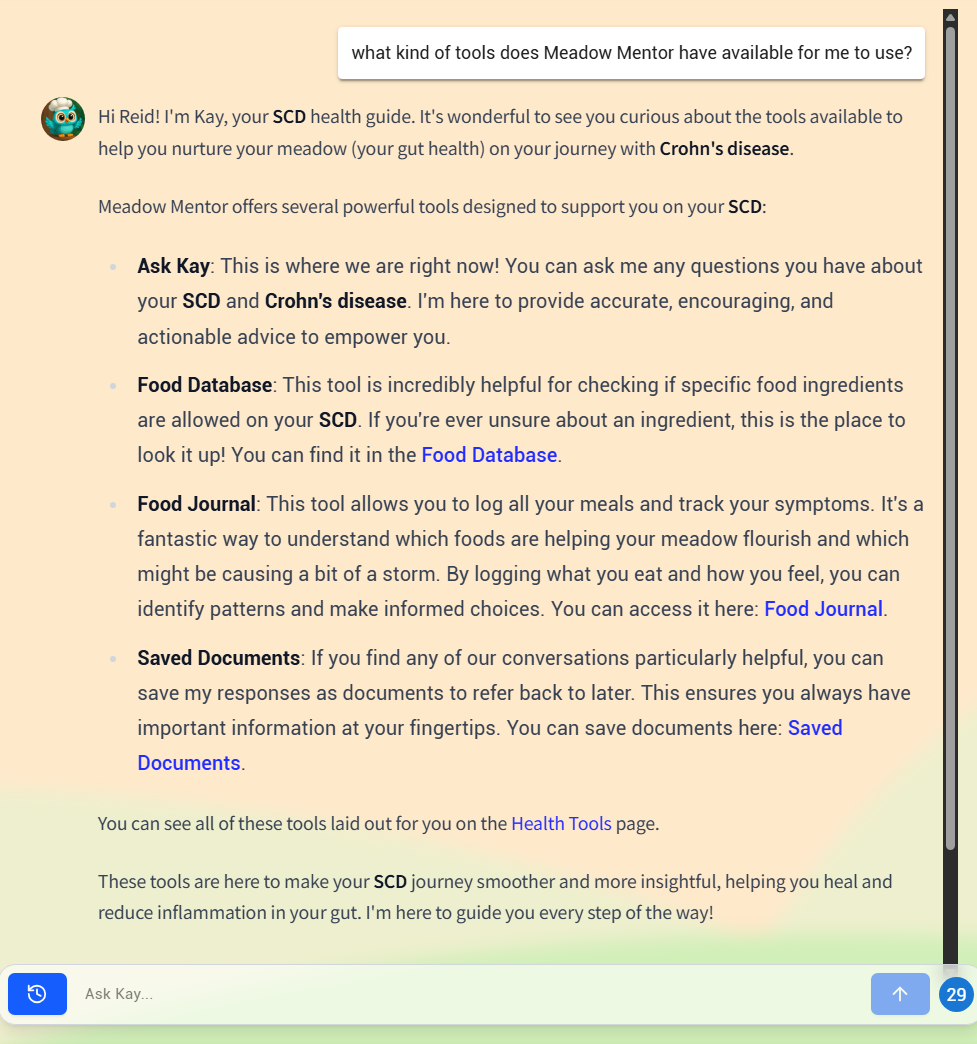

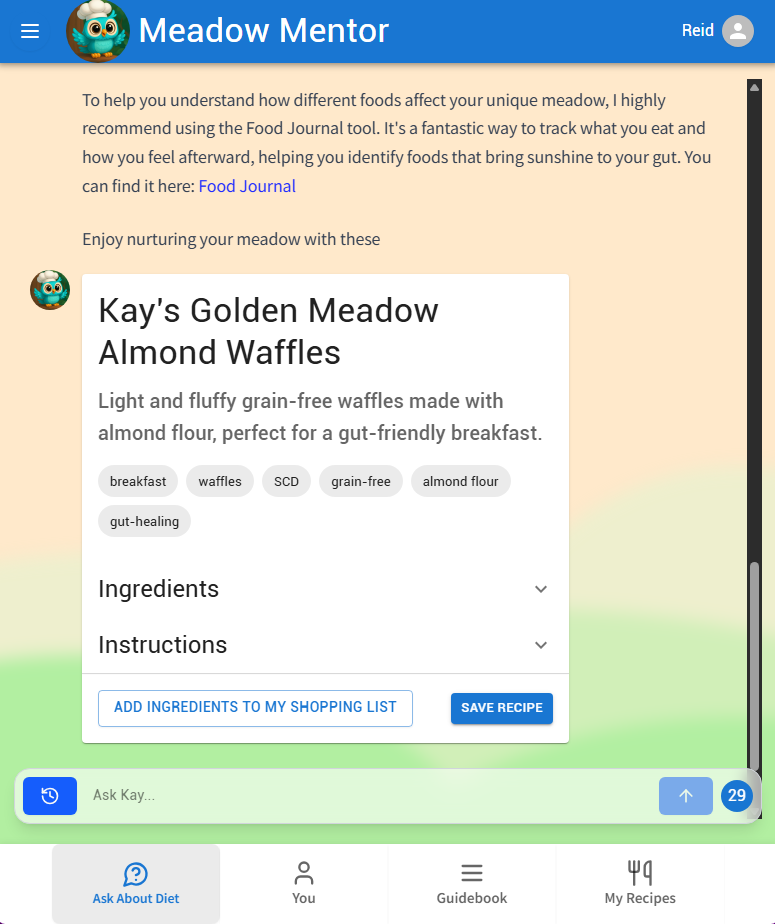

I solved this by making my AI 'app-aware'. Instead of a generic response, users now get this.

The chat is an opportunity to tell users about your app's features as part of onboarding and to give them direct links to those features from the chat interface. In a way, it functions like a dynamically generated UI that serves user needs as they come up. You could take this even further and generate UI elements on the fly.

Whether the AI response is text or GUI-based, it makes for a UX that is:

- dynamic and responsive - it's only presented when the user requests the info

- contextual - presented at the right time based on what the user asked for

- informative - describes features and when to use them

- functional - users can click on embedded links and access the features immediately

Your Onboarding Flow

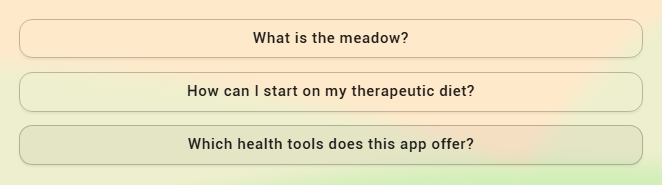

The key is to integrate this into your onboarding. My users come to my app with very specific health needs, so I designed an onboarding flow that addresses those needs first and lets them know they are in the right place. The onboarding then leads them into the AI chat interface. The third option in the flow is about the app’s features.

Let's focus on how to use prompt engineering to enable the AI to guide users toward discovering your app's features.

How to Build It: The 5-Minute 'App-Aware' System Prompt

Below is part of my system prompt, which instructs the AI to describe the tools and link to them. The AI responds in markdown, so its links can point directly to your app's local paths, such as /food-database.

## Meadow Mentor Health Tools

You are empowered to help users by suggesting the powerful tools available within Meadow Mentor. When a user's question or problem aligns with a tool's purpose, you should describe the tool and provide a direct markdown link to it.

Here is your list of available tools and when to use them:

---

**Health Tools Page**

- **Description**: Users can access the health tools page and see cards of all of the tools available to them.
- **When to Suggest**: When a user wants to know how this app can help them or where to find access to the tools.
- **Link**: [Health Tools](/health_tools)

**Tool: Ask Kay**

- **Description**: That's this page. The user is able to ask you questions about their **{{dietCode}}** and **{{conditionTreating}}**.
- **When to Suggest**: When users are asking for an overview of all the app's features, or when they are leaving and you encourage them to come back again to benefit from the app's features, especially talking things through with Kay.
- **Link**: [Ask Kay](/ask-kay)

**Tool: Food Database**

- **Description**: Users can look up food ingredients to check whether they are allowed or not on their **{{dietCode}}**.
- **When to Suggest**: When a user asks if an ingredient is allowed or not.
- **Link**: [Food Database](/food-database)
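The {{dietCode}} and {{conditionTreating}} placeholders have to be filled with each user's details before the prompt is sent. Here is one way to do that, as a minimal sketch rather than Meadow Mentor's actual code: it assumes plain string substitution at request time, and UserProfile, fillPromptTemplate, and the example values are hypothetical.

```typescript
// Hypothetical per-user values; the real data model isn't shown in this post.
// A type alias (not an interface) so it is assignable to Record<string, string>.
type UserProfile = {
  dietCode: string;
  conditionTreating: string;
};

// Replaces every {{key}} placeholder with the matching value.
// Unknown placeholders are left as-is, which makes missing data easy to spot.
function fillPromptTemplate(
  template: string,
  values: Record<string, string>,
): string {
  return template.replace(/\{\{(\w+)\}\}/g, (placeholder: string, key: string) =>
    Object.prototype.hasOwnProperty.call(values, key)
      ? (values[key] as string)
      : placeholder,
  );
}

// Excerpt of the prompt above, with its original placeholders.
const askKayTemplate =
  "- **Description**: That's this page. The user is able to ask you questions " +
  "about their **{{dietCode}}** and **{{conditionTreating}}**.";

// Illustrative values only.
const profile: UserProfile = { dietCode: "low-FODMAP", conditionTreating: "IBS" };

const systemPromptExcerpt = fillPromptTemplate(askKayTemplate, profile);
console.log(systemPromptExcerpt);
```

Leaving unknown placeholders untouched, instead of replacing them with an empty string, means a missing profile field shows up as a literal {{...}} in your logs rather than silently producing a vaguer prompt.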

As you can see, this is simple to set up but powerful: it can significantly improve UX and potentially increase usage of your app's features.
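Because the model writes ordinary markdown links, the frontend has to decide which links are in-app routes and which lead elsewhere. Here is a minimal sketch, assuming root-relative paths like /food-database are app routes and everything else is external; ChatLink and extractChatLinks are hypothetical names, and the regex only handles simple inline links.

```typescript
// One link found in a model reply.
type ChatLink = {
  label: string;
  href: string;
  internal: boolean; // true for in-app routes such as /food-database
};

// Extracts inline markdown links like [Food Database](/food-database).
// Deliberately simple: no nested brackets, link titles, or spaces in URLs.
function extractChatLinks(markdown: string): ChatLink[] {
  // Created per call so the /g regex's lastIndex state is never shared.
  const pattern = /\[([^\]]+)\]\(([^)\s]+)\)/g;
  const links: ChatLink[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(markdown)) !== null) {
    const href = match[2];
    links.push({
      label: match[1],
      href,
      // "//host/..." is protocol-relative, so it counts as external,
      // as do https:, mailto:, and any other scheme.
      internal: href.startsWith("/") && !href.startsWith("//"),
    });
  }
  return links;
}

const sampleReply =
  "You can check that in the [Food Database](/food-database), " +
  "or read more [here](https://example.com/guide).";

for (const link of extractChatLinks(sampleReply)) {
  console.log(link.internal ? "route" : "external", link.href);
}
```

In a React app, internal links could then be rendered with your router's link component (for example, React Router's Link) so clicking one navigates without a full page reload. If you already render replies with a markdown library, overriding how it renders anchors achieves the same thing.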

Next Level: Generative UIs

A simple markdown link is a great start, but you can take this concept further by having the LLM render interactive UI components directly in the chat. I implemented this in Meadow Mentor before I even knew the term "Generative UI" existed. When a user asks for a recipe, the AI doesn't just return text; the chat displays a full React component for a recipe card.

This Recipe Card is fully interactive, with dropdowns for ingredients and buttons to save or add to a shopping list.

How it Works: An Architectural Breakdown

The magic happens with a clear separation of concerns between the frontend and backend, orchestrated by a special token from the LLM. Here is the architecture I designed for Meadow Mentor:

Let’s walk through the flow:

- User Input: The user sends a prompt (e.g., "I'd like a chicken soup recipe") from the Frontend UI / Chat Interface.

- Engineered Prompt: The Backend Server receives the request and forwards an engineered prompt to the AI Model / LLM.

- AI Response with Special Token: The LLM includes a special marker, such as [RECIPE_CARD_DATA], along with the recipe information.

- Response Type Check: The backend checks the response for this special token.

- If it's just text, it's sent back to the frontend for Standard Text Display.

- If the token is found, the backend parses the response, extracts the recipe data, and saves it to the Database.

- Send Component Data: The backend then sends the structured recipe data back to the frontend via a specific event (e.g., a server-sent event).

- Render Component: The frontend listens for this event and uses the data to render the Custom Recipe Card Component in the chat stream instead of plain text.
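The token check and event steps above can be sketched in TypeScript. This is a minimal illustration, not Meadow Mentor's actual code: it assumes the model writes [RECIPE_CARD_DATA] followed by a single JSON object, and RecipeCard, parseLlmReply, toSseMessage, and the recipe_card event name are all hypothetical. The database write is left as a comment.

```typescript
// Hypothetical recipe payload; the real schema isn't shown in this post.
type RecipeCard = {
  title: string;
  ingredients: string[];
  steps: string[];
};

// Result of the "Response Type Check" step.
type ParsedReply =
  | { kind: "text"; text: string }
  | { kind: "recipe"; text: string; recipe: RecipeCard };

const RECIPE_MARKER = "[RECIPE_CARD_DATA]";

// Assumes the system prompt tells the model to write the marker followed by
// exactly one JSON object. Anything malformed falls back to plain text.
function parseLlmReply(reply: string): ParsedReply {
  const markerAt = reply.indexOf(RECIPE_MARKER);
  if (markerAt === -1) {
    return { kind: "text", text: reply };
  }

  const payload = reply.slice(markerAt + RECIPE_MARKER.length);
  const start = payload.indexOf("{");
  const end = payload.lastIndexOf("}");
  if (start === -1 || end < start) {
    return { kind: "text", text: reply };
  }

  try {
    // Cast only: production code should validate the shape before trusting it.
    const recipe = JSON.parse(payload.slice(start, end + 1)) as RecipeCard;
    return { kind: "recipe", text: reply.slice(0, markerAt).trim(), recipe };
  } catch {
    return { kind: "text", text: reply };
  }
}

// Frames data as one Server-Sent Events message. JSON.stringify without
// indentation emits no raw newlines, so a single "data:" line is enough.
function toSseMessage(event: string, data: unknown): string {
  return `event: ${event}\ndata: ${JSON.stringify(data)}\n\n`;
}

const exampleReply =
  "Here's a soup you can make tonight. [RECIPE_CARD_DATA] " +
  '{"title":"Chicken Soup","ingredients":["chicken","carrots"],"steps":["Simmer for 40 minutes"]}';

const parsedExample = parseLlmReply(exampleReply);
if (parsedExample.kind === "recipe") {
  // A real backend would save parsedExample.recipe to the database here.
  console.log(toSseMessage("recipe_card", parsedExample.recipe));
} else {
  console.log(parsedExample.text);
}
```

On the frontend, a browser EventSource delivers a message carrying an event field to listeners registered for that name with addEventListener("recipe_card", ...), which is where the recipe card component would be rendered. Note that EventSource only issues GET requests, so if chat messages are sent by POST you would read the stream with fetch instead. Falling back to plain text on malformed JSON keeps one bad model response from breaking the chat.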

Conclusion: From Dumb Chatbot to Proactive Guide

By moving from a generic LLM to an 'app-aware' one, you can transform your users' first experience. We've covered two powerful techniques to achieve this:

- App-Aware System Prompts: A simple, 5-minute implementation using markdown to make your AI aware of your app's features and guide users to them.

- Generative UIs: A more advanced method to render interactive components directly in the chat, creating a truly dynamic and functional user experience.

The next time you're looking at a blank chat interface in your app, don't see it as a limitation. See it as your best opportunity to onboard and delight your users.
